Don’t name your robo-adviser

Robo-advisers and avatars are becoming increasingly common in the banking world. The most common form is the digital advisory platform, but banks such as UBS in Switzerland have gone a step further and created an avatar of their Chief Economist Daniel Kalt that can give advice to clients in local branches. Full disclosure: Dani and I worked together for many years at UBS, and he really is a great economist and a great guy. But would I trust Dani Kalt the avatar to give me good advice? I am not sure.

And it seems I am not alone. Frank Hodge and his colleagues have run a series of experiments in which students and members of the public were asked to take advice from either a human financial planner or a robo-adviser. The trick? In the first experiment, the 108 students who were asked to take advice were split into groups. For each type of adviser (robo or human), the students were either given the adviser’s name or not. When the advice came from a robo, the robo either had no name or was named Charles.

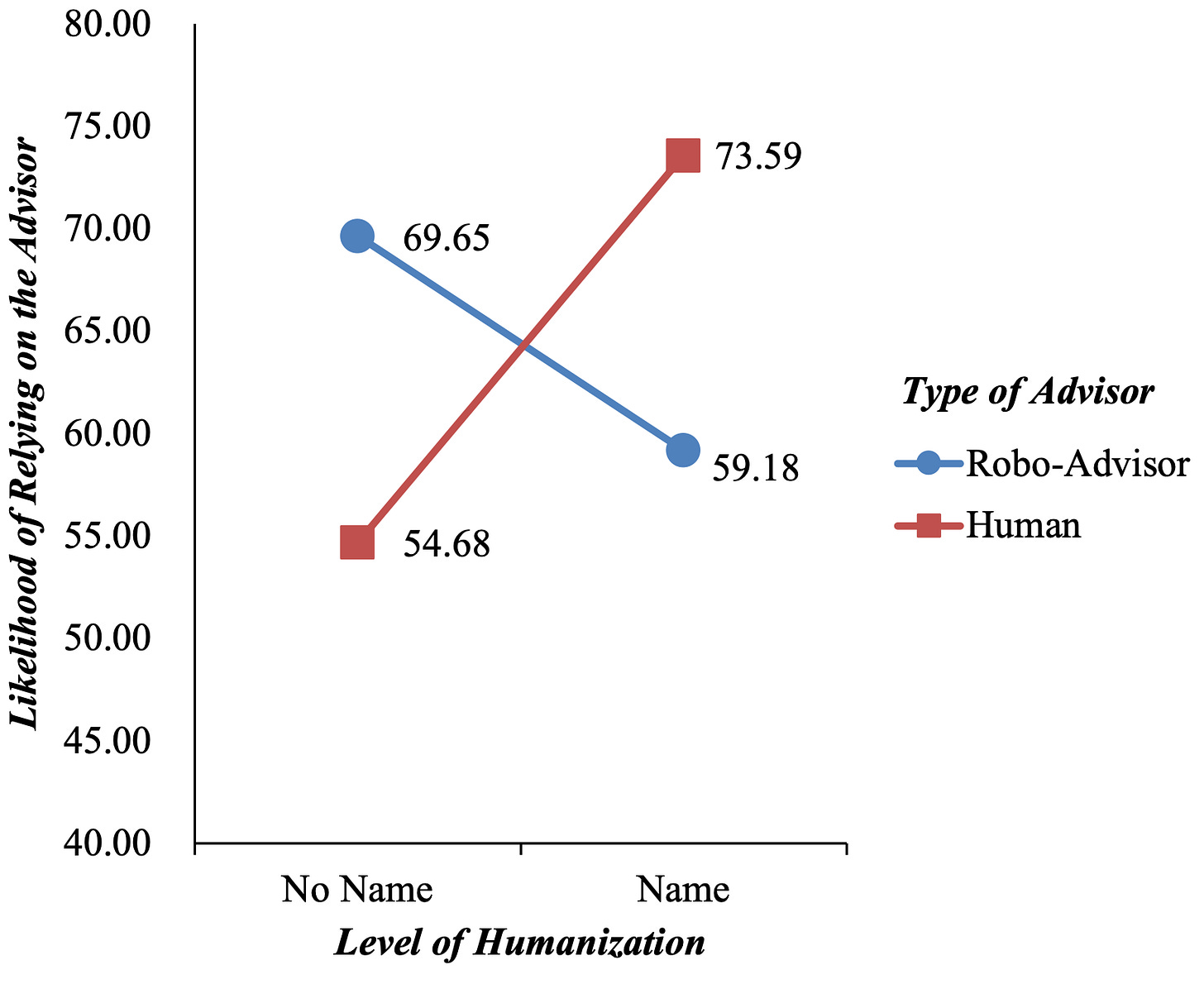

When confronted with a human adviser, the participants in the experiment were more likely to take advice if the human gave his name. That is what you would expect. Humans are social animals, and just knowing the name of your adviser builds a form of intimacy. In fact, getting advice from a human without knowing the adviser’s name (e.g. advice from an anonymous call centre agent) is almost a violation of trust and makes you less likely to take that advice. It would seem to follow that robo-advisers with a name should also be trusted more by clients. Yet the experiment found the opposite: people trusted an anonymous robo-adviser more than a “humanised” robo with a name.

Likelihood of taking advice from human and robo-adviser

Hodge et al. (2020).

In a second experiment, the researchers tried to figure out what drives this counterintuitive result, and it seems to be the perceived complexity of the task at hand. If students were presented with a relatively simple task, like deciding whether or not to rebalance a portfolio, they were more likely to follow the advice of a named robo-adviser than that of an unnamed one. However, when the tasks got more complex, the reverse happened. Nobody seems to really know why that is the case, but it seems that when we are dealing with complex tasks, we want to work either with a human adviser we know and trust or with a machine that can give us “objective” information. Mixing these two dimensions by naming the machine is counterproductive.

Obviously, it is unclear whether a robo-adviser that is humanised beyond just having a name, like the avatar mentioned above, might reverse this effect again. But in my experience, working with digital avatars generally causes more unease than working with Siri or Alexa. Thus, I am going out on a limb here and say that efforts to make robo-advisers more human are all likely to be counterproductive. I think people don’t have a problem getting advice from a machine. What people have a problem with is feeling cheated. And a humanised robo-adviser simply feels like a cheat.