Your brain on ChatGPT: Part 1

If you know people who are in the teaching profession, you will, by now, have heard about how they think ChatGPT is a disaster. The essays they get tend to be written by ChatGPT and other LLMs, and they feel like students don’t put any work into their assignments. Their main concern is that students simply don’t learn anything if they rely on ChatGPT to write their assignments for them. More optimistic takes claim that it is not ChatGPT and similar tools that make us dumber, but how we use them.

Earlier in the year, Michael Gerlich showed through surveys and interviews that people likely offload heavy thinking work to the AI when they have access to it. This suggests that we use our brains less, which may have adverse effects on our cognitive skills.

Today and tomorrow, I want to dive deeper into a new study that looks at the brain activity (measured via EEG) as 54 volunteers wrote essays about a variety of topics. The trick: 18 participants were allowed to use ChatGPT, 18 were allowed to use Google, and 18 were not allowed to use any tools and had to complete the task independently, relying solely on their knowledge.

Admittedly, the main weakness (and it’s a significant one) is the low number of test subjects, which may lead to erroneous results. But keeping that in mind, we can at least get some clue as to whether ChatGPT is indeed making us dumber and what is going on in our brains when we use these tools.

The study is incredibly comprehensive (the paper has more than 200 pages) and looks at the performance of the test subjects from a variety of angles. But to keep it easy, I will focus on a couple of simple-to-understand results from the experiments today, and tomorrow, focus on the brain scans performed during the experiment and what we can learn from these results about our brains and how to use ChatGPT.

Some of the most striking results came when test subjects were asked to recall what they wrote in their essays. Remember, all 54 test subjects were asked to do the same thing: Choose one of ten topics and write an essay about the question posed (just like you would do in school or at university). But one third of the participants could use ChatGPT, one third had access to a search engine, and one third were working without any help.

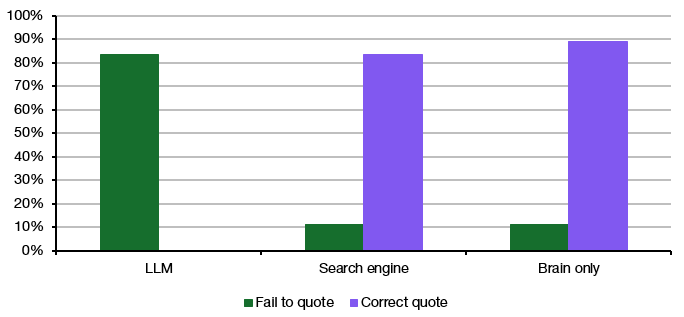

After they were done with their essays, they had to do a few physical and mental exercises before they were asked by a supervisor about the essays they wrote a couple of minutes earlier. Here is how many of them were unable to quote anything from their essays and how many were able to quote entire sentences correctly from the essays they wrote.

Essay recall among participants

Source: Kosmyna et al. (2025)

To me, it is devastating to see that five out of six people who were allowed to use ChatGPT to write an essay could not recall anything they wrote in their essays. And not one of the test subjects could accurately remember a sentence ‘they wrote’. This is in stark contrast to people who used Google or had no tools to help them with their essays. These people were on average much more likely to recall sentences they wrote in their essays because, well, they actually wrote an essay. It seems as if the people who had access to ChatGPT prompted the AI to write the essay for them and then didn’t even bother to read the result before they handed it in.

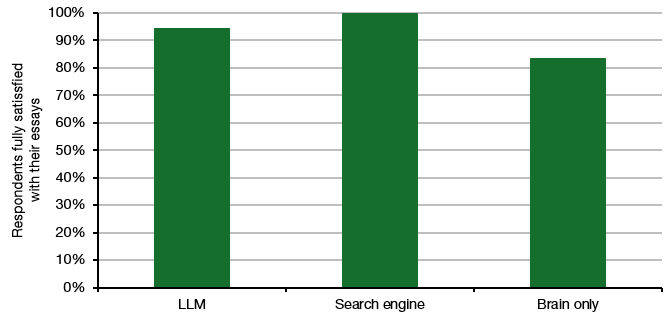

Yet, if you ask the participants how satisfied they were with the quality of their essays, the people who used ChatGPT or Google were, on average, more satisfied with the work they produced than the people who had no tools to help them.

Self-reported satisfaction with essays

Source: Kosmyna et al. (2025)

To assess whether the essays were indeed better when written with the help of ChatGPT or Google, the researchers also asked both real-life teachers and a specially trained AI to assess the quality of the essays. Note that all essays were blind-reviewed, so neither the teachers nor the AI had any idea whether the essays were written with the help of a tool, which tool was used, or whether no help from tools was provided at all.

They found that the AI judge tended to rate the essays written with the help of ChatGPT as being of higher quality than essays written without any help. But human teachers disagreed. To quote from the paper: “Human teachers pointed out how many essays used a similar structure and approach. In the top-scoring essays, human teachers were able to recognise a distinctive writing style associated with the LLM group.” In short, the content may be alright, but the essays sound artificial to a real human when written with ChatGPT. And many human readers simply don’t like this artificial cookie-cutter style that ChatGPT and other LLMs generate.

This is extremely interesting. Even though you apologize for the small sample size involved, that complete lack of recall amongst the LLM group is indeed startling. I think it might be akin to using pen and paper, or even typing into a laptop, to take notes instead of just letting voice recognition transcribe and summarize a management meeting; a bit of work is key to retention and learning.

You've touched upon something that I believe will continue to trip up complete adoption of AI: The Uncanny Valley. Originally applied to the "ick" factor one feels with humanoid robots (https://en.wikipedia.org/wiki/Uncanny_valley), that "distinctive writing style" the teachers could start to recognize is already manifest in AI-created images, videos, and music. The conventional wisdom at present is "well, just wait until the technology improves", but I think society's going to reward and advantage those who have a good nose for things ... sort of like being able to tell if a luxury watch or a handbag is fake from several paces away.

That said, I've heard that students are already a step ahead and deliberately inserting misspellings and grammatical errors into their LLM-generated schoolwork. Ironically, this might have some of them decide to just create their own prose themselves.

Anyway, with any luck, this'll get society away from overdependence on written tests, and resurrect oral exams, which are the ultimate "I know it when I see it" litmus tests for teachers (https://en.wikipedia.org/wiki/I_know_it_when_I_see_it).

Reminds me of a post by Scott Cunningham [1] which contained a few tell-tale signs of AI-written papers. I found two quite notable:

- AI-written text generally lacks a thesis and a point of view, and

- Humans write papers whose paragraph lengths vary greatly; AIs don’t usually do that unless you actively prompt them to.

Which also means that if you engage more with the AI when prompting it to write the text, then this should lead to better albeit different forms of retention:

A while ago there was another interesting observation by Venkatesh Rao [2] in relation to some of these studies, where he compared people “writing papers with AI” to managers who, rather than writing themselves, “delegate and supervise writing work of a competent intern” (and noted that “prompting well” in such a context means knowing when to chase alpha and “when to let the index run”).

Juniors who jump to that “managerial” role directly often lack the expertise and experience in managing (and delegating to) other humans properly, which is one element that leads to bad outcomes. (As a side note, [3] should be of particular interest to you. Maybe you’ve come across it already. ☺️)

[1] Cunningham, Scott. “How to Tell If ChatGPT Wrote the Students Essays.” Substack Newsletter. Scott’s Mixtape Substack (blog), December 18, 2024. https://causalinf.substack.com/p/how-to-tell-if-chatgpt-wrote-the.

[2] Rao, Venkatesh. “Prompting Is Managing.” Substack Newsletter. Contraptions (blog), June 19, 2025. https://contraptions.venkateshrao.com/p/prompting-is-managing.

[3] Rao, Venkatesh. “LLMs as Index Funds.” Substack Newsletter. Contraptions (blog), April 1, 2025. https://contraptions.venkateshrao.com/p/llms-as-index-funds.