Corporate reports are getting longer and longer, and earnings calls aren’t getting any better either. As investors, we have to wade through this swamp of clutter and legal disclosures to find the actual information. Luckily, ChatGPT and other LLMs can help us with that, and at the same time amplify signals that clutter and legalese obscure.

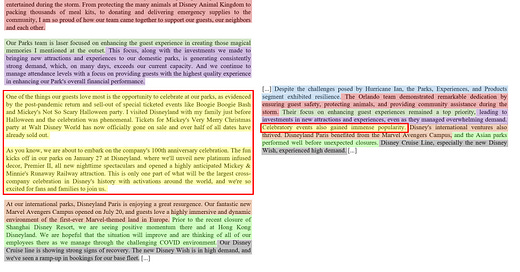

More and more analysts at my firm are using AI to save time. For example, instead of listening to a lengthy earnings call, they let an app transcribe the call and then use AI to summarise its contents. If you don’t plan to ask any questions, that can cut the time spent on earnings calls dramatically. Take the example below, which shows the transcript of Disney’s Q4 2022 earnings call on the left and the ChatGPT summary on the right. It’s quite a difference.

Summarizing earnings calls with ChatGPT can reduce bloat

Source: Kim et al. (2024).

This example is taken from new research at the University of Chicago. The authors used ChatGPT (GPT-3.5) to summarise 1,790 management discussion and analysis (MD&A) sections from the annual reports of 339 US companies, as well as a total of 8,537 conference calls.

They find that the length of a call or MD&A discussion can be reduced by 70-75% without loss of information, which gives you an idea of how much clutter there is in today’s corporate statements. Once you remove the clutter, the sentiment and information content become not only clearer but also more pronounced: good news and bad news both get amplified. This may explain why companies try to obfuscate bad news by writing long MD&A statements. But it cannot explain why, in many instances, good news is also buried in noise. This kind of clutter makes markets less efficient and dampens the impact of the information companies provide.
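To see why stripping clutter can make the tone more pronounced, consider a simple length-normalised tone score: the same positive words carry more weight once the surrounding filler is gone. The sketch below is a toy illustration under stated assumptions (hypothetical word lists, whitespace tokenisation and invented sample text); it is not the sentiment measure Kim et al. use.

```python
# Hypothetical toy word lists; NOT the sentiment measure used by Kim et al. (2024).
POSITIVE = {"growth", "strong", "record", "improved"}
NEGATIVE = {"decline", "loss", "weak", "impairment"}


def tokens(text: str) -> list[str]:
    """Lower-cased words with trailing punctuation stripped (naive whitespace split)."""
    return [w.strip(".,;:").lower() for w in text.split()]


def net_tone(text: str) -> float:
    """(positive - negative) / total words: a simple length-normalised tone score."""
    words = tokens(text)
    if not words:
        return 0.0
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return (pos - neg) / len(words)


def length_reduction(original: str, summary: str) -> float:
    """Share of words removed by summarising (0.7 means 70% shorter)."""
    return 1 - len(tokens(summary)) / len(tokens(original))


# Invented example: one substantive sentence padded with legal filler.
raw = ("Revenue showed strong growth. As noted in our filings and subject to the "
       "risks described in the cautionary statement, results may vary from period to period.")
summary = "Revenue showed strong growth."

print(f"reduction: {length_reduction(raw, summary):.0%}")
print(f"tone raw: {net_tone(raw):.3f}, tone summary: {net_tone(summary):.3f}")
```

The positive signal is identical in both texts; only the filler differs, so the summary's tone score is several times higher than the raw text's.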

Using the summarised, compact versions of MD&A statements, the authors were better able to separate companies with positive news from those with negative news and, as a result, found larger performance differences between the two groups.
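Comparisons like this typically rest on a long-short sort: rank firms by a tone score, go long the most positive and short the most negative, and measure the return spread. A minimal sketch, assuming equal-weighted top and bottom 20% groups and hypothetical tickers, scores and returns (the paper's actual holding periods, grouping and weighting are not described here). Running it once with tone scores from full MD&A text and once with scores from summaries would give the kind of comparison described above.

```python
from statistics import mean


def long_short_spread(scores: dict[str, float], returns: dict[str, float],
                      frac: float = 0.2) -> float:
    """Average return of the most positive-tone firms minus the most negative ones.

    `frac` is the share of firms in each leg (an assumption; 0.2 = top/bottom 20%).
    """
    ranked = sorted(scores, key=scores.get)  # firms in ascending order of tone
    n = max(1, int(len(ranked) * frac))      # at least one firm per leg
    bottom, top = ranked[:n], ranked[-n:]
    return mean(returns[f] for f in top) - mean(returns[f] for f in bottom)


# Hypothetical tone scores and subsequent returns for five firms.
scores = {"A": 0.4, "B": 0.1, "C": -0.3, "D": 0.0, "E": 0.2}
returns = {"A": 0.05, "B": 0.01, "C": -0.04, "D": 0.00, "E": 0.02}

print(round(long_short_spread(scores, returns), 4))
```

A sharper tone signal matters here because it changes which firms land in the top and bottom legs; if summaries sort firms more accurately, the spread widens.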

Performance differences are more pronounced when summarised MD&A statements are used

Source: Kim et al. (2024).

The same is true for earnings calls: summarising the call makes it easier to separate companies with good news from those with bad news, which, in turn, can enhance performance.

Performance differences are more pronounced when summarised conference calls are used

Source: Kim et al. (2024).

There may be a use case yet for LLMs. I read 10 prospectuses last weekend. Roughly 1,000 pages. Probably 80% boilerplate on "risks", etc. I can see a case for a prompt (and I think I'll write one) that reads prospectuses, identifies common boilerplate and then notes it in the summary as essentially a footnote, unless there's a risk that goes beyond the standard ones.

I think you could do the same for privacy policies and for terms of use in online applications.

Thanks for kicking this off in my head!
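The boilerplate-flagging idea in the comment above can also be approximated without an LLM, as a cheap pre-filter before summarising. A minimal sketch, swapping in plain exact matching: split each document into paragraphs on blank lines, normalise case and whitespace, and flag any paragraph that appears in at least a given share of documents. The paragraph splitting, the `min_share` threshold and the sample texts are assumptions; this only catches verbatim or near-verbatim boilerplate, so paraphrased risk factors would still need fuzzy matching or an LLM prompt.

```python
from collections import Counter


def normalise(paragraph: str) -> str:
    """Collapse whitespace and case so trivially reformatted copies still match."""
    return " ".join(paragraph.lower().split())


def split_boilerplate(docs: list[str], min_share: float = 0.5) -> list[tuple[list[str], list[str]]]:
    """For each document, return (boilerplate paragraphs, distinctive paragraphs).

    A paragraph counts as boilerplate if its normalised text appears in at least
    `min_share` of all documents (an assumed threshold, not a standard value).
    """
    paras_per_doc = [[p for p in d.split("\n\n") if p.strip()] for d in docs]
    doc_freq: Counter = Counter()
    for paras in paras_per_doc:
        # Use a set so a paragraph repeated within one document is counted once.
        doc_freq.update({normalise(p) for p in paras})
    threshold = min_share * len(docs)
    result = []
    for paras in paras_per_doc:
        common = [p for p in paras if doc_freq[normalise(p)] >= threshold]
        unique = [p for p in paras if doc_freq[normalise(p)] < threshold]
        result.append((common, unique))
    return result


# Invented mini-prospectuses sharing one standard risk paragraph.
docs = [
    "Investing involves risk.\n\nWe make widgets.",
    "Investing involves risk.\n\nWe mine lithium in Chile.",
    "INVESTING  involves risk.\n\nWe rely on a single supplier.",
]

for common, unique in split_boilerplate(docs):
    print("footnote:", common, "| summarise:", unique)
```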

Interesting post!

So, in the future, if everyone is using AI tools (I do not think there is a significant difference between ChatGPT, Claude and Gemini, especially when it comes to summarization), will we see stocks move more sharply in one direction or the other than when humans do the summarizing? Humans don't all produce the same summary, whereas everyone using AI will get a very similar summary and may react similarly. Is this possible, is it already happening on a small scale, or am I overanalyzing the situation? What kind of risk does this create for market stability?